Transfer Learning

Selvarajah Thuseethan | Deakin University | Australia

Transfer learning is a machine learning paradigm that has recently gained prominence, in which the knowledge gained while solving one problem is reused on a set of related tasks. It has been used extensively in computer vision, particularly in image classification. For example, the knowledge learned while recognizing vehicles can be reused to recognize different kinds of airplanes. Recent research shows that transfer learning between different domains can also achieve benchmark results. For instance, models trained to detect a variety of real-world objects (e.g., jellyfish, slug, and crane) can be transferred effectively to affect recognition.

Transfer learning in neural networks was first explicitly addressed by Stevo Bozinovski and Ante Fulgosi in 1976 [1]. Their paper describes the transfer learning technique as a geometrical and mathematical model, which was later used to implement transfer learning applications. As an important milestone, at the NeurIPS 2016 conference, the well-known computer scientist Andrew Ng said that transfer learning would be the next driver of the commercial success of machine learning, after supervised learning. Since then, many machine learning researchers have recognized the importance of transfer learning and its applicability to regression, classification, and clustering tasks [2].

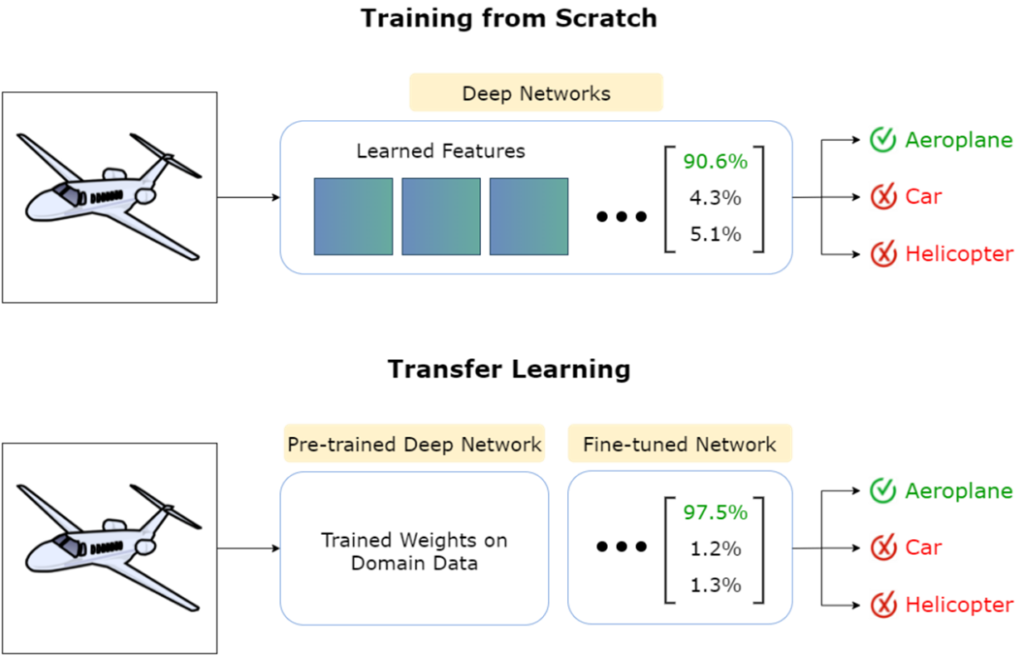

Compared with the traditional approach of training a model from scratch, transfer learning typically requires less training data and is faster and more efficient. Another key advantage is that researchers do not need to build deep networks from scratch, which is often a time-consuming task. Transfer learning is a two-step process involving pre-training and fine-tuning. Figure 1 compares the transfer learning and training-from-scratch pipelines for a classification task. As shown in the figure, the deep network is first trained on the source-domain data and then fine-tuned on the target data to perform the classification task.

Figure 1: Comparison of training from scratch and transfer learning pipelines
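The two-step idea can be illustrated with a deliberately tiny stand-in for a deep network. The sketch below is not taken from the article: it assumes a one-dimensional linear model, a hypothetical source task (y = 2x + 1), and a related hypothetical target task (y = 2x + 1.5) with only three samples. It pre-trains on the source data, then compares a short fine-tuning run against training from scratch with the same small budget.

```python
# Toy illustration of pre-training followed by fine-tuning.
# The tasks, data, and hyper-parameters are assumptions chosen for illustration.

def mse(w, b, data):
    """Mean squared error of the linear model y = w * x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def train(w, b, data, steps, lr=0.1):
    """Plain gradient descent on the MSE, starting from the given (w, b)."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Source task: plenty of data from y = 2x + 1.
source = [(i / 10, 2 * (i / 10) + 1.0) for i in range(-10, 11)]
# Target task: only three samples from the related function y = 2x + 1.5.
target = [(-1.0, -0.5), (0.0, 1.5), (1.0, 3.5)]

# Step 1: pre-train on the source task.
w_pre, b_pre = train(0.0, 0.0, source, steps=500)

# Step 2: fine-tune on the target task, starting from the pre-trained weights.
w_ft, b_ft = train(w_pre, b_pre, target, steps=5)

# Baseline: train from scratch on the target task with the same budget.
w_scratch, b_scratch = train(0.0, 0.0, target, steps=5)

print("fine-tuned target loss:  ", mse(w_ft, b_ft, target))
print("from-scratch target loss:", mse(w_scratch, b_scratch, target))
```

In this toy setting the fine-tuned model reaches a lower target loss within the same number of steps, because the pre-trained slope already matches the target task and only the offset needs to be adjusted. Real deep networks behave far less predictably, so this should be read as an intuition aid rather than as evidence.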

Further, three important questions need to be considered when performing transfer learning: what, when, and how to transfer? The first and essential question is which portion of the knowledge learned from the source domain should be transferred to the target in order to improve model performance. Answering it involves identifying the knowledge that is common to both the source and the target. The second and most important question is at which point the knowledge should be transferred. Just as importantly, researchers should decide when knowledge transfer should not be done, because a poorly matched transfer can degrade the target's performance. Finally, the third question, how to transfer, concerns the changes required to the learning algorithms, which must be addressed carefully.

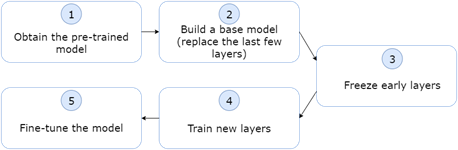

With these three questions in mind, the real-world implementation of transfer learning is carried out in a five-step process, as shown in Figure 2. Obtaining a suitable pre-trained model is the first and foremost step. Researchers who perform transfer learning-based image classification often use state-of-the-art deep networks with weights pre-trained on the ImageNet dataset. The VGG variants, Inception V3, Xception, and ResNet50 are some popular deep networks used as pre-trained models for computer vision problems. Once the model is selected, its last few layers are replaced by new layer configurations. These new layers are then trained on the target data while the early layers of the model are kept frozen. As the final step, the model hyper-parameters are fine-tuned until the best model is achieved.

Figure 2: Implementation steps of transfer learning

Both the TensorFlow and PyTorch machine learning frameworks provide extensive support for transfer learning with neural networks.

For example, a state-of-the-art model can be loaded with ImageNet weights as given below:

model = tensorflow.keras.applications.MODULES(…, …, weights='imagenet')

where MODULES is a placeholder for the model to load. In Keras, keywords such as densenet, efficientnet, and mobilenet_v2 name submodules of tensorflow.keras.applications, while the callable constructors are classes such as DenseNet121, EfficientNetB0, and MobileNetV2.
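Building on that call, the implementation steps described earlier (obtain a pre-trained model, replace its last layers, freeze the early layers, and train the new layers) might be sketched in Keras as follows. This is a minimal sketch under stated assumptions, not the article's own implementation: the helper name build_transfer_model, the choice of MobileNetV2, the input size, the new head, and the optimizer settings are all illustrative.

```python
import tensorflow as tf

def build_transfer_model(num_classes, weights="imagenet", input_shape=(224, 224, 3)):
    """Hypothetical helper: a frozen pre-trained base with a new trainable head."""
    # Obtain a pre-trained model without its original classification layers.
    base = tf.keras.applications.MobileNetV2(
        input_shape=input_shape, include_top=False, weights=weights)

    # Freeze the pre-trained layers so that only the new head is trained.
    base.trainable = False

    # Replace the removed top layers with a new head for the target task.
    # Note: inputs are assumed to be preprocessed already, e.g. with
    # tf.keras.applications.mobilenet_v2.preprocess_input.
    inputs = tf.keras.Input(shape=input_shape)
    x = base(inputs, training=False)  # keep batch-norm layers in inference mode
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)
    model = tf.keras.Model(inputs, outputs)

    # Hyper-parameters such as the learning rate are then tuned on the target data.
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=1e-3),
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"])
    return model
```

Calling build_transfer_model(num_classes=10) downloads the ImageNet weights on first use, after which model.fit can be run on the target dataset. Passing weights=None builds the same architecture with random weights, which is useful for checking the wiring without a download but gives up the benefit of pre-training.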

In summary, transfer learning has become a prominent machine learning paradigm that has shown significant improvements in many areas, including computer vision. It may well prove to be the next driver of the industrial success of machine learning and deep learning techniques.

References

[1] S. Bozinovski and A. Fulgosi, 1976. The influence of pattern similarity and transfer learning upon training of a base perceptron B2. In Proceedings of Symposium Informatica (pp. 3-121).

[2] S. J. Pan and Q. Yang, 2009. A survey on transfer learning. IEEE Transactions on Knowledge and Data Engineering, 22(10), pp. 1345-1359.